Barney the purple robot dinosaur and the importance of being embodied in AI

Elon Musk's worst nightmare and more robot events coming up

The Open X-Embodiment (OXE) Repository, with over 1 million real robot trajectories spanning 22 different robot embodiments, is a gift that is going to keep giving! I can’t wait to see the research generated from it, as the original researchers are already finding better performance and improved generalization in the RT-1-X and RT-2-X models. A general-purpose robotics model might be on its way!

That’s way more exciting than the incredible hype around the purple robot dinosaur blob ‘designed by AI’! I won’t say the original researchers deliberately went for headlines, but calling this “the birth of a brand-new organism” is bullshit. It implies that this isn’t an ongoing field of research, when many others have achieved more interesting results in the past (see a recent example from ASU, and also the work of Heni Ben Amor, Hod Lipson, and others).

I don’t like paywalling posts, so the paywall on last week’s IROS post, and on a few more coming up, goes away after a few weeks, leaving them free to read. But if you are impatient and subscribe to this Substack, you become an angel investor in the work I do at Silicon Valley Robotics as a startup advisor and accelerator.

Many, many thanks to all who subscribed this week!!!

Quick Reads:

Elon Musk’s worst nightmare - Business Insider

Joseph F. Engelberger Foundation Donates $20,000 to The Joanne Pransky Scholarship Funded by Women in Robotics - A3 Association for Advancing Automation

Formant Raises $21M Funding To Support Explosive Enterprise Growth - Formant

Operationalizing Robot Fleets at Scale: Our Lead Investment in Formant - BMW i Ventures

The robots are coming to collaborate with you - WSJ

Robots, AI, and the future of labor: an economic opportunity ‘way bigger than the steam engine’ - GeekWire

Neura Robotics brings in another $16M - The Robot Report

Archer Secures $65 Million in Financing for ‘World’s Largest’ eVTOL Production Plant - Flying

Elroy Demos Autonomous Cargo Capability To USAF - Aviation Week

The US Company Will Build ZF’s Autonomous Shuttle - Autoweek

China gives Ehang the first industry approval for fully autonomous, passenger-carrying air taxis - CNBC

Driverless taxi chaos in San Francisco erodes public trust in autonomous technology - ABC News

Didi’s autonomous vehicle arm raises $149M from state investors - TechCrunch

We are decades off having self-driving cars that have the freedom to go anywhere, autonomous vehicle boss says - Business Insider

Autonomous Vehicles Are Driving Blind - NYTimes

Saronic brings in $55M for autonomous vehicles - The Robot Report

CEVA Logistics rolls out Boston Dynamics Stretch and Spot robots at LA warehouse - DCVelocity

Domino’s CTO on Microsoft AI Partnership, Future of Autonomous Delivery - Food on Demand

Honda introduces prototype electric autonomous work mower at Equip Exposition 2023 - Honda

FBR prepares to roll out Hadrian bricklaying robot in the U.S. - The Robot Report

Transportation and logistics sector drove rising robot sales in 2022 - Supply Chain Quarterly

Ag-tech startup Aigen, which sells solar-powered weed-thumping robots, lands $12M - GeekWire

Talking farming robots with Muddy Machines at the Goodwood Festival of Speed - AgFunder

Robotics Digitization Startup Ripcord Seeks $25 Million in Funding - Robots.Net

Microsoft to create team dedicated to data center automation and robotics - DCD

Seeing Robotics and Machine Vision as Dynamical Systems - EETimes

The real bionic woman is first to have robotic limb merged with bone and controlled with her mind - NYPost

Rosie Attwell’s journey from art to automation - CSIRO

Robotics Events:

Maker Faire is back in the Bay Area at Mare Island Oct 13-15 and Oct 20-22 (I’m giving a talk on Building the Future with a New Breed of Robots at midday on Sunday Oct 22)

18-22 October - ROSCon + PX4 Autopilot Developer Summit - New Orleans and virtual from Open Robotics (save $100 by registering for both events)

25 October - The Food AI Summit - Alameda CA from The Spoon

26 October - Tech Together: Bridging Communities - Mountain View from InOrbit

30 October - 3 Nov - ICAM, the International Conference on Advanced Manufacturing - Washington DC from ASME

3 Nov - AI x Robotics Hackathon - SF from Schematic Ventures

6-9 Nov - Future in Review - Terranea CA from The Strategic Review (I’m hosting a panel with Agility Robotics, Apptronik and 1X)

Want to attend more science-based robotics conferences?

CoRL 2023 Atlanta GA 6 Nov - 9 Nov

Humanoids 2023 Austin TX 11 Dec - 14 Dec

HRI 2024 Boulder CO 11 Mar - 14 Mar

Haptics 2024 Long Beach CA 7 Apr - 10 Apr

Robosoft 2024 San Diego CA 14 Apr - 17 Apr

ICRA 2024 Yokohama Japan 13 May - 17 May 2024

ARSO 2024 Hong Kong, China 20 May - 22 May 2024

AIM 2024 Boston MA 14 July - 18 July

CASE 2024 Puglia Italy 28 Aug - 1 Sep 2024

IROS 2024 Abu Dhabi 14 Oct - 18 Oct 2024

DARS 2024 New York 28 Oct - 30 Oct 2024

Humanoids 2024 Nancy FR 26 Nov - 28 Nov

ICRA 2025 Atlanta GA 17 May - 23 May 2025

Deep Reads:

Missing AI Leadership on Labor Policy - Betsy Masiello, Proteus Strategies

New Report Identifies Research Priorities to Revitalize Supply Chain and Manufacturing Sector - ERVA (NSF Engineering Research Visioning Alliance)

Interesting Robots:

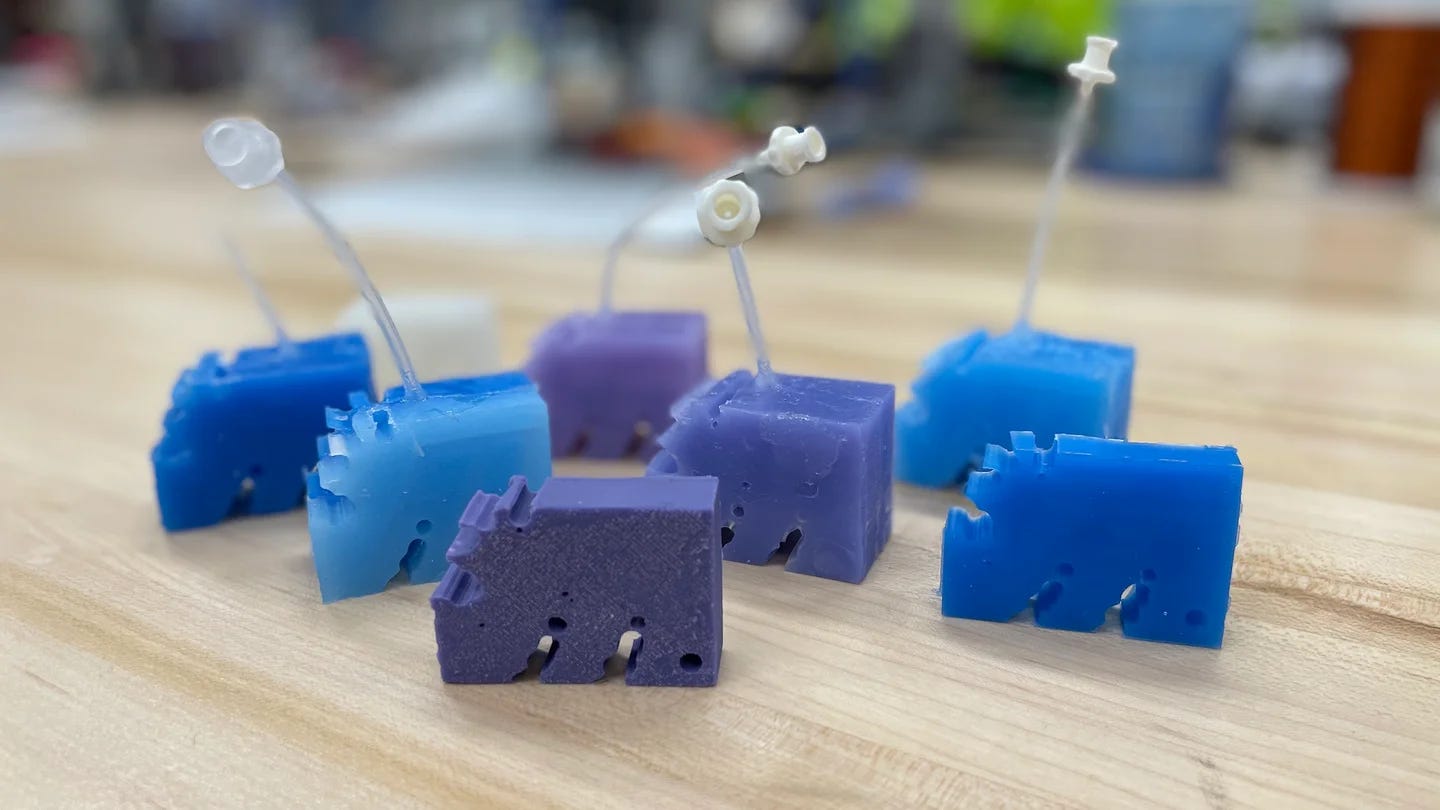

Open X-Embodiment Dataset: Collecting data to train AI robots

Datasets, and the models trained on them, have played a critical role in advancing AI. Just as ImageNet propelled computer vision research, we believe Open X-Embodiment can do the same to advance robotics. Building a dataset of diverse robot demonstrations is the key step to training a generalist model that can control many different types of robots, follow diverse instructions, perform basic reasoning about complex tasks, and generalize effectively. However, collecting such a dataset is too resource-intensive for any single lab.

To develop the Open X-Embodiment dataset, we partnered with academic research labs across more than 20 institutions to gather data from 22 robot embodiments, demonstrating more than 500 skills and 150,000 tasks across more than 1 million episodes. This dataset is the most comprehensive robotics dataset of its kind.

RT-X: A general-purpose robotics model

RT-X builds on two of our robotics transformer models. We trained RT-1-X using RT-1, our model for real-world robotic control at scale, and we trained RT-2-X on RT-2, our vision-language-action (VLA) model that learns from both web and robotics data. Through this, we show that given the same model architecture, RT-1-X and RT-2-X are able to achieve greater performance thanks to the much more diverse, cross-embodiment data they are trained on. We also show that they improve on models trained in specific domains, and exhibit better generalization and new capabilities.

To evaluate RT-1-X at partner academic universities, we compared how it performed against models developed for their specific task, like opening a door, on the corresponding dataset. RT-1-X trained with the Open X-Embodiment dataset outperformed the original models by 50% on average.